Audio Track

This document introduces the functions, implementation principles, and operating guidelines of Audio Track to help application-layer developers build application features on top of Audio Track.

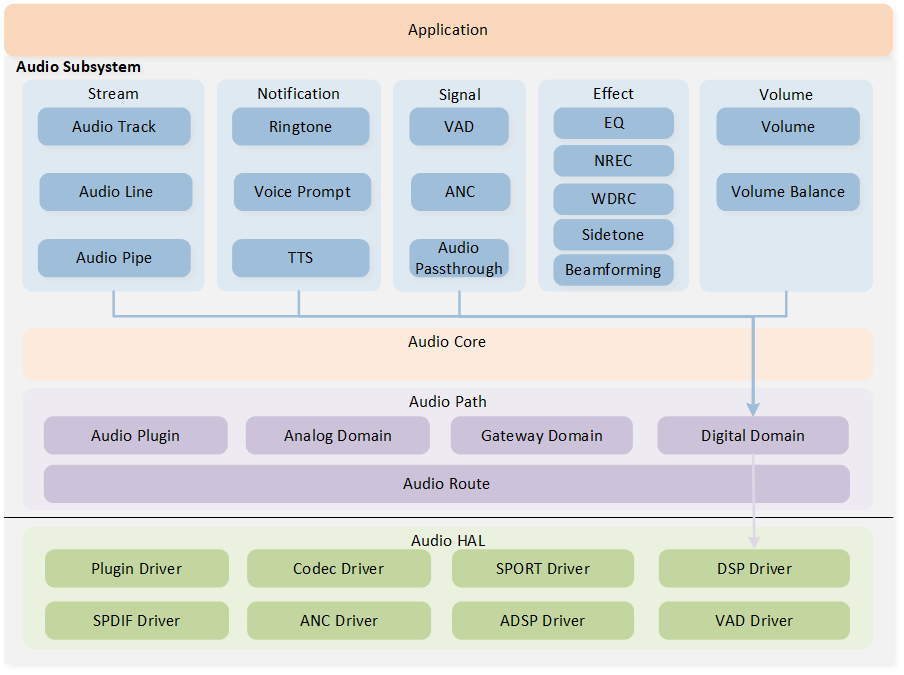

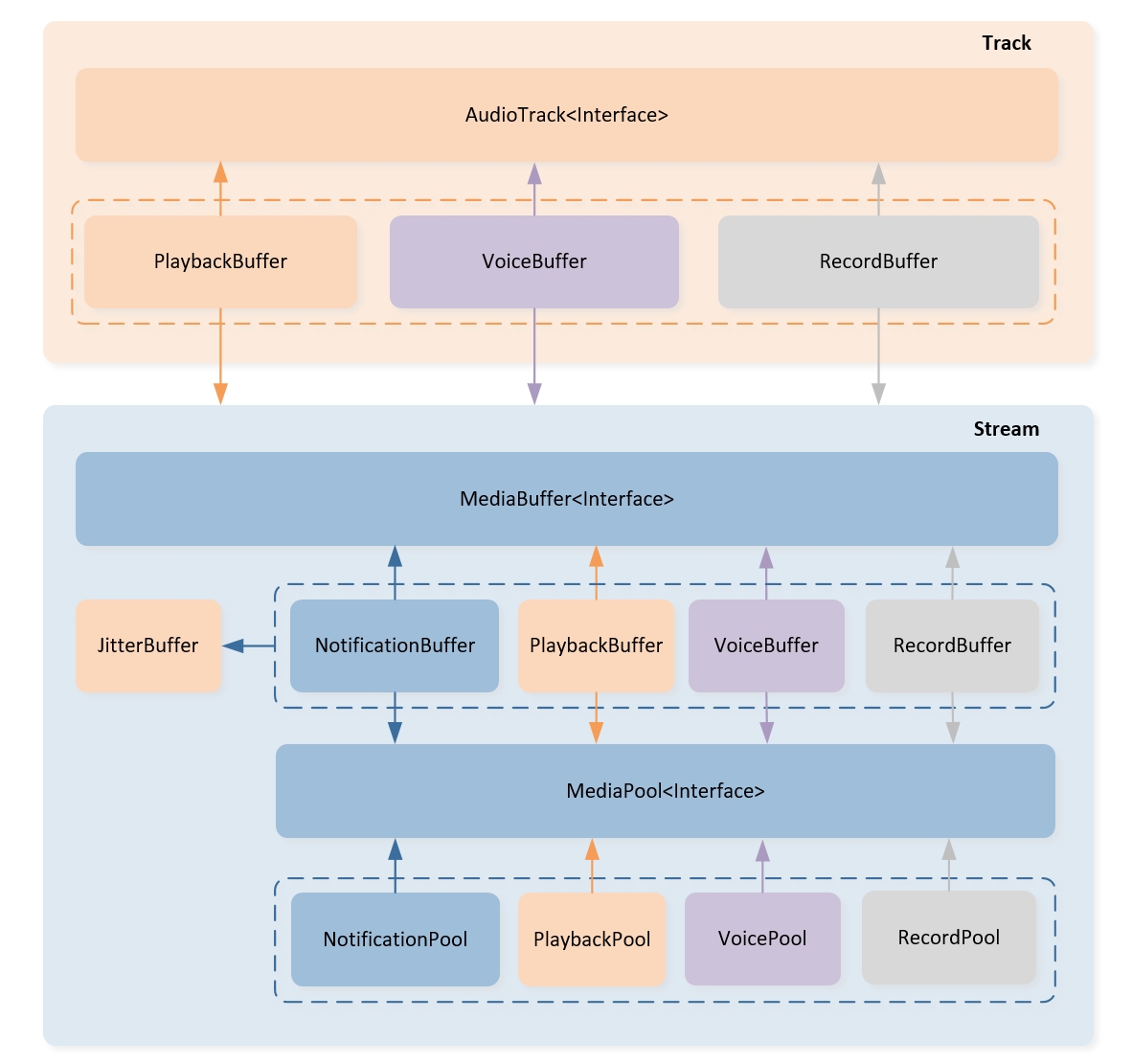

As a high-level functional module within the Audio Subsystem, Audio Track provides a series of APIs to handle Playback, Voice Communication, and Record streams. The Playback stream refers to music or multimedia audio. The Voice Communication stream refers to bidirectional speech transmitted through VoIP, cellular calls, and other media. The Record stream is used for speech recognition or data capture. The modules involved in Audio Track are marked in gray in the architecture diagram below, including Audio Track, Volume, Audio Latency, Media Buffer, and Audio Remote.

Audio Subsystem Architecture

Overview

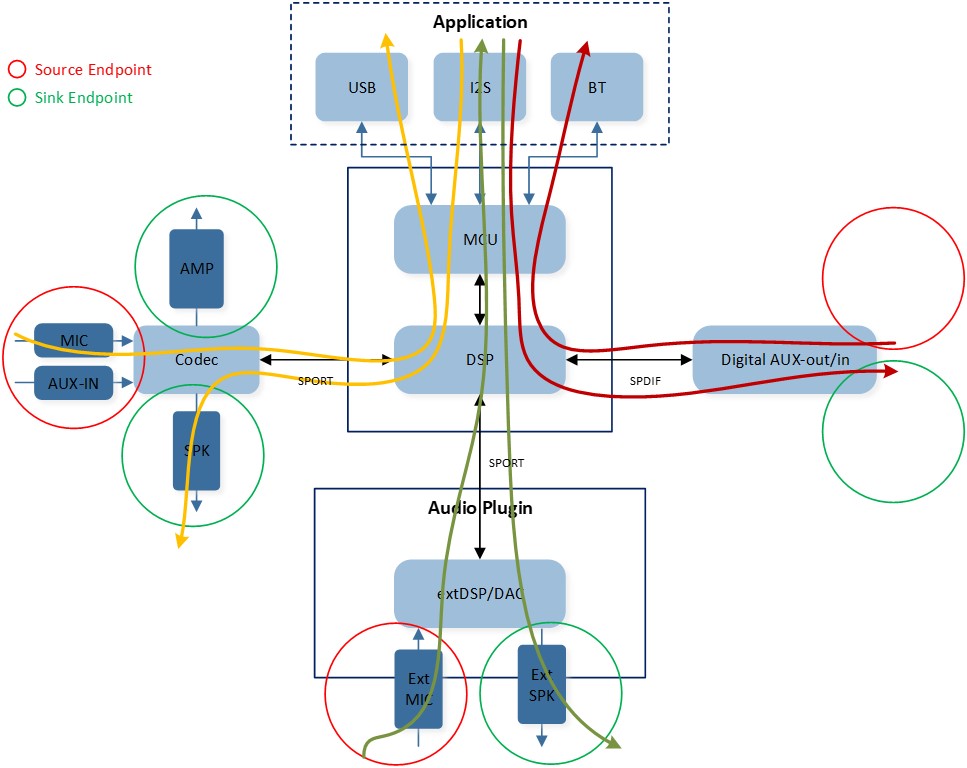

The figure below gives an overview of the Audio Track across different modules. A Playback Audio Track transfers the dedicated stream from the application layer to the local output Peripherals. A Record Audio Track transfers the dedicated stream from the local input Peripherals to the application layer. A Voice Audio Track functions as a combination of the Playback Audio Track and the Record Audio Track, but with a different stream type.

Audio Track Overview

The table below summarizes the Audio Track Endpoints for different stream types. Applications shall select the appropriate stream type to create an Audio Track instance. Audio Track is not limited to USB, I2S, and Bluetooth scenarios; it can also help the application layer move audio upstream and downstream over transports such as UART, SPI, and I2C.

| Type | Value | Source Endpoint | Sink Endpoint |
|---|---|---|---|
| AUDIO_STREAM_TYPE_PLAYBACK | 0x00 | Application | Output Peripherals |
| AUDIO_STREAM_TYPE_VOICE | 0x01 | Application (Decoder) / Input Peripherals (Encoder) | Output Peripherals (Decoder) / Application (Encoder) |
| AUDIO_STREAM_TYPE_RECORD | 0x02 | Input Peripherals | Application |

The table below lists the codec types supported by the Audio Track.

| Type | Value |
|---|---|
| AUDIO_FORMAT_TYPE_PCM | 0x00 |
| AUDIO_FORMAT_TYPE_CVSD | 0x01 |
| AUDIO_FORMAT_TYPE_MSBC | 0x02 |
| AUDIO_FORMAT_TYPE_SBC | 0x03 |
| AUDIO_FORMAT_TYPE_AAC | 0x04 |
| AUDIO_FORMAT_TYPE_OPUS | 0x05 |
| AUDIO_FORMAT_TYPE_FLAC | 0x06 |
| AUDIO_FORMAT_TYPE_MP3 | 0x07 |
| AUDIO_FORMAT_TYPE_LC3 | 0x08 |
| AUDIO_FORMAT_TYPE_LDAC | 0x09 |
| AUDIO_FORMAT_TYPE_LHDC | 0x0A |
| AUDIO_FORMAT_TYPE_G729 | 0x0B |
| AUDIO_FORMAT_TYPE_LC3PLUS | 0x0C |

Implementation

This section describes Audio Track modeling, the Audio Track lifecycle, and the features of Audio Track.

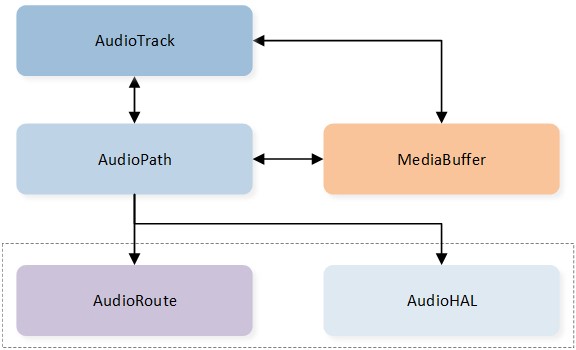

Track Modeling

The Audio Track is built on the Audio Path component and bundled with a Media Buffer instance. As shown in the figure below, the Audio Track uses the Audio Path to configure and control the underlying hardware routings, keeping the state of the Audio Track synchronized with the Audio Path. Additionally, the Audio Track creates a Media Buffer instance for each stream direction. The Media Buffer instance stores stream data for decoding and encoding and provides a set of jitter handling operations for the Audio Track.

Audio Track Modeling

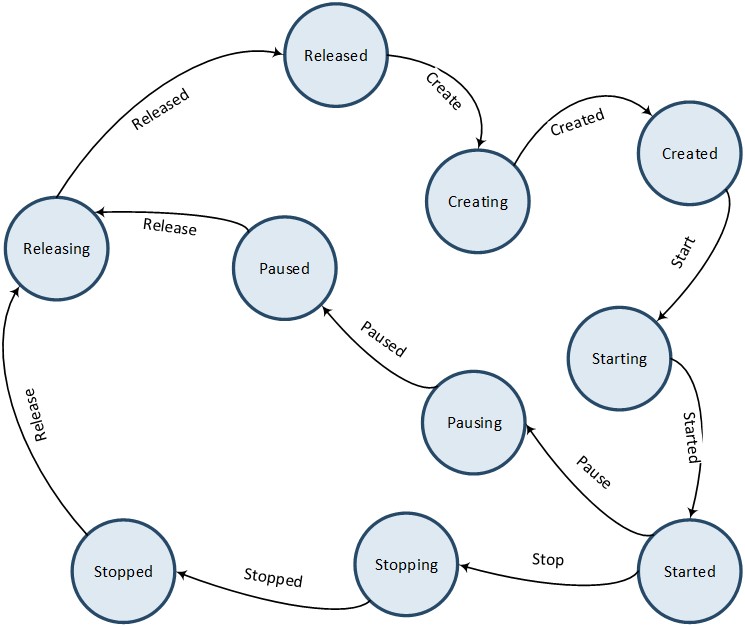

Track Lifecycle

Each instance of Audio Track has its own lifecycle, which is defined by the following states: Released, Creating, Created, Starting, Started, Stopping, Stopped, Pausing, Paused, Restarting, and Releasing. The table below defines these states.

| State | Description |
|---|---|
| Released | Track instance does not exist or has already been destroyed. |
| Creating | Track instance is in the transient creating state. |
| Created | Track instance is created and static resources are allocated. |
| Starting | Track instance is in the transient starting state. |
| Started | Track instance is started and the underlying hardware is enabled. |
| Stopping | Track instance is in the transient stopping state. |
| Stopped | Track instance is stopped and the underlying hardware is disabled. |
| Pausing | Track instance is in the transient pausing state. |
| Paused | Track instance is paused and the underlying hardware is disabled. |
| Restarting | Track instance is in the transient restarting state. |
| Releasing | Track instance is in the transient releasing state. |

The figure below illustrates the state transitions for an Audio Track. When an instance of Audio Track is created, it initially enters the Released state as a temporary placeholder and then transitions directly to the Creating state. Once the creation process is completed, it enters the Created state. From the Created state, the instance can either be released directly, passing through the Releasing state to the Released state, or be started on demand, passing through the Starting state to the Started state. An active instance can also be stopped on demand, passing through the Stopping state to the Stopped state.

Note

The pause action terminates the Audio Track only after the stream in its bound Media Buffer has been drained, whereas the stop action terminates the Audio Track immediately.

The restart action combines the stop and start actions. If the Audio Track is active, it transitions from the Stopping state to the Stopped state, and then from the Starting to the Started state. If the Audio Track is already stopped or has not been started yet, it will be started directly, transitioning from the Starting state to the Started state.
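The restart rule in the note above can be written as a small pure function. This is only a sketch: `track_state_t` and `restart_path()` are illustrative names that do not come from the SDK, and states the note does not mention (for example Paused) are deliberately left uncovered.

```c
#include <stddef.h>

/* Hypothetical stand-in for the lifecycle states named in this document. */
typedef enum
{
    TRACK_RELEASED, TRACK_CREATING, TRACK_CREATED, TRACK_STARTING,
    TRACK_STARTED, TRACK_STOPPING, TRACK_STOPPED, TRACK_PAUSING,
    TRACK_PAUSED, TRACK_RESTARTING, TRACK_RELEASING
} track_state_t;

/*
 * Write into 'path' the states a restart passes through when issued in
 * 'current'. Returns the number of states written, or 0 when 'current' is
 * not covered by the note or 'path' cannot hold the result.
 */
size_t restart_path(track_state_t current, track_state_t *path, size_t cap)
{
    static const track_state_t from_active[] =
        {TRACK_STOPPING, TRACK_STOPPED, TRACK_STARTING, TRACK_STARTED};
    static const track_state_t from_idle[] = {TRACK_STARTING, TRACK_STARTED};
    const track_state_t *src;
    size_t n;
    size_t i;

    switch (current)
    {
    case TRACK_STARTED: /* active track: stop first, then start again */
        src = from_active;
        n = sizeof(from_active) / sizeof(from_active[0]);
        break;
    case TRACK_CREATED: /* not started yet */
    case TRACK_STOPPED: /* already stopped */
        src = from_idle;
        n = sizeof(from_idle) / sizeof(from_idle[0]);
        break;
    default:
        return 0;
    }

    if (path == NULL || cap < n)
    {
        return 0;
    }
    for (i = 0; i < n; i++)
    {
        path[i] = src[i];
    }
    return n;
}
```

Calling `restart_path(TRACK_STARTED, buf, 4)` fills `buf` with Stopping, Stopped, Starting, Started, while a stopped or newly created track yields only Starting, Started.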

Audio Track Lifecycle

It can be difficult for the application layer to know which actions are valid in a given Audio Track state. The table below summarizes the permitted, prohibited, and pending operations for each state for application-layer developers to reference.

| State | audio_track_create | audio_track_start | audio_track_restart | audio_track_stop | audio_track_pause | audio_track_release |
|---|---|---|---|---|---|---|
| Released | Permitted | Prohibited | Prohibited | Prohibited | Prohibited | Prohibited |
| Creating | Prohibited | Pending | Pending | Prohibited | Prohibited | Permitted |
| Created | Prohibited | Permitted | Permitted | Prohibited | Prohibited | Permitted |
| Starting | Prohibited | Permitted | Permitted | Permitted | Permitted | Permitted |
| Started | Prohibited | Prohibited | Permitted | Permitted | Permitted | Permitted |
| Stopping | Prohibited | Permitted | Permitted | Permitted | Permitted | Permitted |
| Stopped | Prohibited | Permitted | Permitted | Prohibited | Prohibited | Permitted |
| Pausing | Prohibited | Permitted | Permitted | Permitted | Permitted | Permitted |
| Paused | Prohibited | Permitted | Permitted | Permitted | Prohibited | Permitted |
| Restarting | Prohibited | Permitted | Permitted | Permitted | Permitted | Permitted |
| Releasing | Prohibited | Prohibited | Prohibited | Prohibited | Prohibited | Permitted |
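An application that wants to check an operation before issuing it could encode the table above as a lookup matrix. The sketch below is one way to do that; every type and function name in it (`track_state_t`, `track_op_t`, `op_rule_t`, `track_op_rule()`) is hypothetical and is not part of the Audio Track API.

```c
/* Hypothetical stand-ins; rows and columns follow the table above. */
typedef enum
{
    ST_RELEASED, ST_CREATING, ST_CREATED, ST_STARTING, ST_STARTED, ST_STOPPING,
    ST_STOPPED, ST_PAUSING, ST_PAUSED, ST_RESTARTING, ST_RELEASING, ST_COUNT
} track_state_t;

typedef enum
{
    OP_CREATE, OP_START, OP_RESTART, OP_STOP, OP_PAUSE, OP_RELEASE, OP_COUNT
} track_op_t;

typedef enum { RULE_PROHIBITED, RULE_PERMITTED, RULE_PENDING } op_rule_t;

#define NO RULE_PROHIBITED
#define OK RULE_PERMITTED
#define PD RULE_PENDING

/* Column order: create, start, restart, stop, pause, release. */
static const op_rule_t k_rules[ST_COUNT][OP_COUNT] =
{
    {OK, NO, NO, NO, NO, NO}, /* Released   */
    {NO, PD, PD, NO, NO, OK}, /* Creating   */
    {NO, OK, OK, NO, NO, OK}, /* Created    */
    {NO, OK, OK, OK, OK, OK}, /* Starting   */
    {NO, NO, OK, OK, OK, OK}, /* Started    */
    {NO, OK, OK, OK, OK, OK}, /* Stopping   */
    {NO, OK, OK, NO, NO, OK}, /* Stopped    */
    {NO, OK, OK, OK, OK, OK}, /* Pausing    */
    {NO, OK, OK, OK, NO, OK}, /* Paused     */
    {NO, OK, OK, OK, OK, OK}, /* Restarting */
    {NO, NO, NO, NO, NO, OK}, /* Releasing  */
};

#undef NO
#undef OK
#undef PD

/* Look up the rule for 'op' in 'state'; out-of-range input is prohibited. */
op_rule_t track_op_rule(track_state_t state, track_op_t op)
{
    if ((unsigned)state >= ST_COUNT || (unsigned)op >= OP_COUNT)
    {
        return RULE_PROHIBITED;
    }
    return k_rules[state][op];
}
```

Keeping the matrix in one place means a change to the permission table needs only a one-row edit rather than updates to scattered `if` checks.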

Media Buffer

As a part of the Audio Track, Media Buffer plays a crucial role in buffering the Playback, Voice, and Record stream data, and acts as a bridge between the Audio Track and the Media Pool. Additionally, we developed Jitter Buffer that is attached to the Media Buffer to handle dejittering.

Media Buffer Overview

Audio Latency

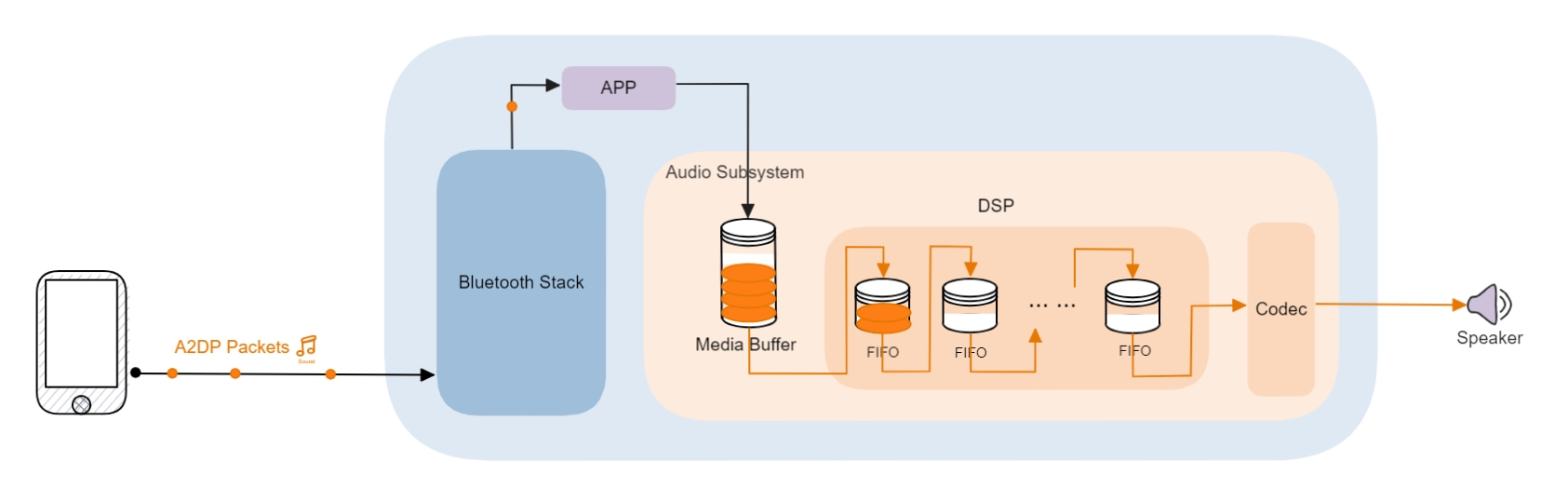

Audio Latency is an important performance metric for audio playback: it determines how long the SNK takes to play back audio data sent by the SRC. The figure below uses BR/EDR as an example to illustrate how the audio packets are processed.

A2DP Stream Flow

As shown in the diagram, after A2DP packets from the phone are received by the Bluetooth Stack, they are transparently transmitted to the APP. The application is responsible for writing the data into Audio Track. Depending on the latency settings and the Playback status of the DSP, Audio Track can either buffer the data in the media buffer or send the data to the DSP. The digital audio signal is processed by the DSP and then converted from digital to analog by the Codec, finally being played through the speaker as high-quality audio.

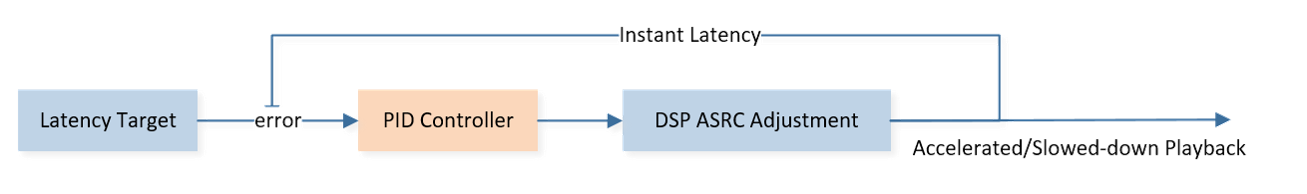

Jitter Buffer

Jitter Buffer is designed specifically for dejittering and is activated only when the stream type is Playback. We have developed a PID (proportional-integral-derivative) closed-loop control system based on ASRC to dejitter audio data streams without impacting the listening experience.
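This document does not give the controller gains or the ASRC interface, so the following is only a generic discrete PID loop written under stated assumptions: the measured quantity is the buffer level in milliseconds, the output is a clamped rate correction in ppm that would be handed to the ASRC, and a positive output means "consume faster". All names (`pid_ctrl_t`, `pid_init()`, `pid_step()`) are hypothetical.

```c
#include <assert.h>

/* Hypothetical controller state; gains and limit are chosen by the caller. */
typedef struct
{
    float kp;         /* proportional gain */
    float ki;         /* integral gain */
    float kd;         /* derivative gain */
    float integral;   /* accumulated error over time */
    float prev_error; /* error seen on the previous step */
    float limit_ppm;  /* output is clamped to [-limit_ppm, +limit_ppm] */
} pid_ctrl_t;

void pid_init(pid_ctrl_t *c, float kp, float ki, float kd, float limit_ppm)
{
    assert(c != 0);
    c->kp = kp;
    c->ki = ki;
    c->kd = kd;
    c->integral = 0.0f;
    c->prev_error = 0.0f;
    c->limit_ppm = limit_ppm;
}

/*
 * One control step. 'target_ms' is the desired buffer level, 'level_ms' the
 * measured level, and 'dt_s' the time since the previous step in seconds.
 * Returns the clamped rate correction in ppm.
 */
float pid_step(pid_ctrl_t *c, float target_ms, float level_ms, float dt_s)
{
    float error;
    float derivative;
    float out;

    assert(c != 0);
    assert(dt_s > 0.0f);

    error = level_ms - target_ms; /* too much buffered data gives a positive error */
    derivative = (error - c->prev_error) / dt_s;
    c->integral += error * dt_s;
    c->prev_error = error;

    out = c->kp * error + c->ki * c->integral + c->kd * derivative;
    if (out > c->limit_ppm)
    {
        out = c->limit_ppm;
    }
    else if (out < -c->limit_ppm)
    {
        out = -c->limit_ppm;
    }
    return out;
}
```

Clamping the output keeps the rate correction small, which is consistent with the stated goal of dejittering without an audible effect; a production controller would typically also guard the integral term against wind-up.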

Jitter Buffer PID Controller

True Wireless Stereo

Audio Track, based on the capabilities of First Synchronization, Seamless Join and Periodic Synchronization, can help you to achieve full TWS functionality in both BR/EDR and LEA easily.

TWS Playing

Audio Track Parameters

Note

The application should create an Audio Track with correct parameters, or the Audio Track will not work as expected.

Stream Type

Audio Track provides three stream types to handle Playback, Voice, and Record streams.

```c
typedef enum t_audio_stream_type
{
    AUDIO_STREAM_TYPE_PLAYBACK = 0x00, /* Audio stream for audio Playback */
    AUDIO_STREAM_TYPE_VOICE    = 0x01, /* Audio stream for voice communication */
    AUDIO_STREAM_TYPE_RECORD   = 0x02, /* Audio stream for recognition or capture */
} T_AUDIO_STREAM_TYPE;
```

Stream Mode

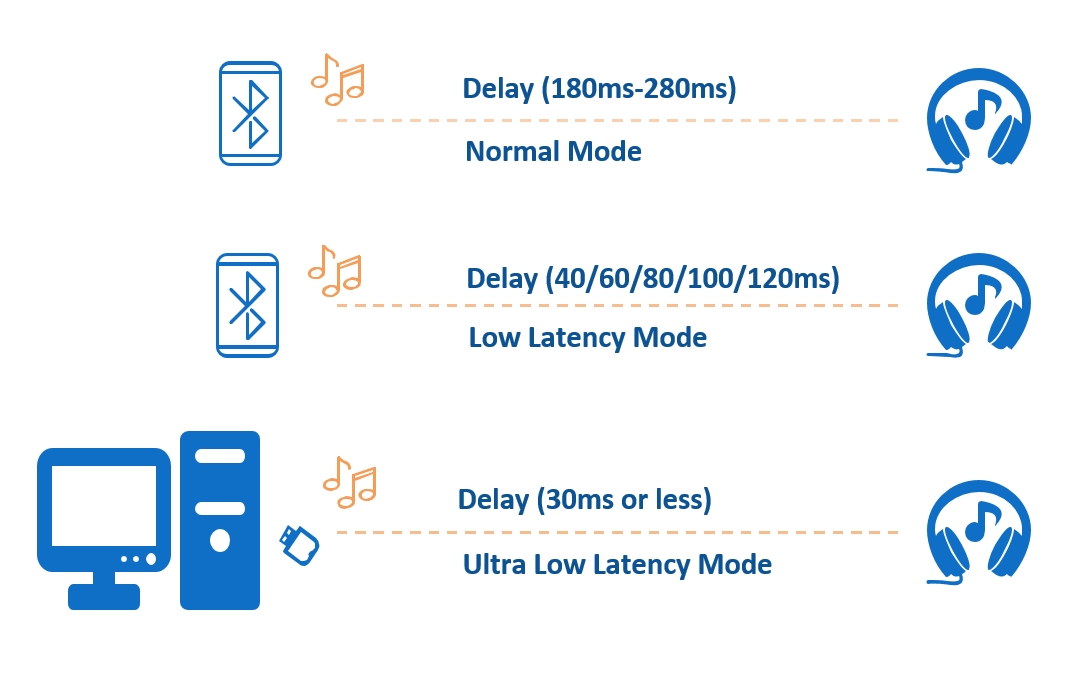

The application layer should select the appropriate Stream mode for the Playback Audio Track based on the latency needs and the application scenarios.

```c
typedef enum t_audio_stream_mode
{
    AUDIO_STREAM_MODE_NORMAL            = 0x00, /* Audio stream normal mode */
    AUDIO_STREAM_MODE_LOW_LATENCY       = 0x01, /* Audio stream low-latency mode */
    AUDIO_STREAM_MODE_HIGH_STABILITY    = 0x02, /* Audio stream high-stability mode */
    AUDIO_STREAM_MODE_DIRECT            = 0x03, /* Audio stream direct mode */
    AUDIO_STREAM_MODE_ULTRA_LOW_LATENCY = 0x04, /* Audio stream ultra low-latency mode */
} T_AUDIO_STREAM_MODE;
```

Note

AUDIO_STREAM_MODE_HIGH_STABILITY is currently deprecated.

- AUDIO_STREAM_MODE_NORMAL supports a latency between 180 ms and 280 ms. This mode favors stable Playback of BR/EDR audio sources in environments with interference: the greater the latency, the more data is buffered, which helps to resist interference.
- AUDIO_STREAM_MODE_LOW_LATENCY has five low-latency levels, corresponding to 40 ms, 60 ms, 80 ms, 100 ms, and 120 ms. This mode suits BR/EDR gaming scenarios that need a low-latency audio experience.
- AUDIO_STREAM_MODE_ULTRA_LOW_LATENCY is used only when a Dongle is connected via BR/EDR, achieving an end-to-end latency of less than 30 ms.
- AUDIO_STREAM_MODE_DIRECT is currently used only for LE Audio. Its latency depends on the Presentation Delay setting, and when used with a Dongle, the end-to-end latency can be below 20 ms.
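The latency figures above can be captured in two small helpers. This is a sketch only: the function names are hypothetical, and numbering the five low-latency levels 0 to 4 in ascending order of latency is an assumption, since the document lists the values but not how the levels are indexed.

```c
#include <stdbool.h>
#include <stdint.h>

/*
 * Map a low-latency level to its latency in milliseconds.
 * Assumption: levels are indexed 0..4 in ascending latency order.
 * Returns 0 for an out-of-range level.
 */
uint16_t low_latency_level_to_ms(uint8_t level)
{
    static const uint16_t k_latency_ms[] = {40, 60, 80, 100, 120};

    if (level >= sizeof(k_latency_ms) / sizeof(k_latency_ms[0]))
    {
        return 0;
    }
    return k_latency_ms[level];
}

/* True when 'latency_ms' lies in the 180 ms to 280 ms range of normal mode. */
bool normal_mode_latency_valid(uint16_t latency_ms)
{
    return latency_ms >= 180 && latency_ms <= 280;
}
```

For example, a latency of 280 ms passes `normal_mode_latency_valid()`, while 120 ms belongs to the low-latency range and is rejected.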

Audio Stream Mode

Stream Usage

The Stream Usage determines whether the Audio Track needs to interact with remote devices to achieve synchronized Playback.

- AUDIO_STREAM_USAGE_LOCAL denotes that the audio source originates locally, encompassing data stored in flash memory or transmitted via interfaces such as I2S, UART, or USB. This classification enables the audio stream to be processed and played independently of external resources.
- AUDIO_STREAM_USAGE_RELAY is currently deprecated.
- AUDIO_STREAM_USAGE_SNOOP is particularly prevalent in wireless audio transmission scenarios, including Bluetooth, Wi-Fi, and 2.4G. It indicates that an SRC transmits audio data to one SNK, and occasionally another SNK is used for simultaneous Playback.

```c
typedef enum t_audio_stream_usage
{
    AUDIO_STREAM_USAGE_LOCAL = 0x00, /* Audio Stream local usage */
    AUDIO_STREAM_USAGE_RELAY = 0x01, /* Audio Stream remote relay usage */
    AUDIO_STREAM_USAGE_SNOOP = 0x02, /* Audio Stream remote snoop usage */
} T_AUDIO_STREAM_USAGE;
```

Format Information

The format information specifies how the DSP should decode/encode the stream data. The frame number is a predefined value that estimates the maximum memory required.

```c
typedef struct t_audio_format_info
{
    T_AUDIO_FORMAT_TYPE type;         /* Audio format type defined in \ref T_AUDIO_FORMAT_TYPE */
    uint8_t             frame_num;    /* Preset frame number */
    union
    {
        T_AUDIO_PCM_ATTR     pcm;     /* Audio PCM format attribute */
        T_AUDIO_CVSD_ATTR    cvsd;    /* Audio CVSD format attribute */
        T_AUDIO_MSBC_ATTR    msbc;    /* Audio mSBC format attribute */
        T_AUDIO_SBC_ATTR     sbc;     /* Audio SBC format attribute */
        T_AUDIO_AAC_ATTR     aac;     /* Audio AAC format attribute */
        T_AUDIO_OPUS_ATTR    opus;    /* Audio OPUS format attribute */
        T_AUDIO_FLAC_ATTR    flac;    /* Audio FLAC format attribute */
        T_AUDIO_MP3_ATTR     mp3;     /* Audio MP3 format attribute */
        T_AUDIO_LC3_ATTR     lc3;     /* Audio LC3 format attribute */
        T_AUDIO_LDAC_ATTR    ldac;    /* Audio LDAC format attribute */
        T_AUDIO_LHDC_ATTR    lhdc;    /* Audio LHDC format attribute */
        T_AUDIO_LC3PLUS_ATTR lc3plus; /* Audio LC3plus format attribute */
    } attr;
} T_AUDIO_FORMAT_INFO;
```

Audio Volume

Audio Volume controls the volume of the audio stream.

- When creating a Playback Audio Track, you can only set volume_out.
- When creating a Voice Audio Track, you must set both volume_out and volume_in.
- When creating a Record Audio Track, you can only set volume_in.
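The three rules above reduce to two predicates on the stream type. The sketch below uses a local `stream_type_t` enum whose values mirror T_AUDIO_STREAM_TYPE; the enum and both function names are illustrative and not part of the SDK.

```c
#include <stdbool.h>

/* Local stand-in mirroring the values of T_AUDIO_STREAM_TYPE. */
typedef enum
{
    STREAM_PLAYBACK = 0x00,
    STREAM_VOICE    = 0x01,
    STREAM_RECORD   = 0x02
} stream_type_t;

/* volume_out applies to the tracks that render audio: Playback and Voice. */
bool stream_uses_volume_out(stream_type_t type)
{
    return type == STREAM_PLAYBACK || type == STREAM_VOICE;
}

/* volume_in applies to the tracks that capture audio: Voice and Record. */
bool stream_uses_volume_in(stream_type_t type)
{
    return type == STREAM_VOICE || type == STREAM_RECORD;
}
```

An application could call these before creating a track to decide which volume arguments carry meaning and which should be passed as 0.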

Device

We have defined 4 kinds of output devices and 4 kinds of input devices which affect the configuration of Audio Route.

```c
#define AUDIO_DEVICE_OUT_SPK   0x00000001 /* Speaker for audio Playback or voice communication */
#define AUDIO_DEVICE_OUT_AUX   0x00000002 /* Analog AUX-level output */
#define AUDIO_DEVICE_OUT_SPDIF 0x00000004 /* Digital SPDIF output */
#define AUDIO_DEVICE_OUT_USB   0x00000008 /* USB audio out */
#define AUDIO_DEVICE_IN_MIC    0x00010000 /* Microphone for voice communication or record */
#define AUDIO_DEVICE_IN_AUX    0x00020000 /* Analog AUX-level input */
#define AUDIO_DEVICE_IN_SPDIF  0x00040000 /* Digital SPDIF input */
#define AUDIO_DEVICE_IN_USB    0x00080000 /* USB audio in */
```
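Because each device is a single bit, device values can be combined and tested with bitwise operations. The sketch below repeats four of the definitions so that it compiles on its own. The two masks (`DEVICE_OUT_MASK`, `DEVICE_IN_MASK`) and the helper functions are hypothetical, and the split into a low output half and a high input half is inferred from the listed values rather than stated by the SDK.

```c
#include <stdbool.h>
#include <stdint.h>

/* Repeated from the definitions above so the sketch is self-contained. */
#define AUDIO_DEVICE_OUT_SPK 0x00000001
#define AUDIO_DEVICE_OUT_AUX 0x00000002
#define AUDIO_DEVICE_IN_MIC  0x00010000
#define AUDIO_DEVICE_IN_AUX  0x00020000

/* Assumption: outputs occupy the low 16 bits and inputs the high 16 bits. */
#define DEVICE_OUT_MASK 0x0000FFFFu
#define DEVICE_IN_MASK  0xFFFF0000u

/* True when at least one output device bit is set. */
bool device_has_output(uint32_t device)
{
    return (device & DEVICE_OUT_MASK) != 0;
}

/* True when at least one input device bit is set. */
bool device_has_input(uint32_t device)
{
    return (device & DEVICE_IN_MASK) != 0;
}

/* True when exactly one device bit is set. */
bool device_is_single(uint32_t device)
{
    return device != 0 && (device & (device - 1)) == 0;
}
```

A Voice track, for instance, would be described by `AUDIO_DEVICE_OUT_SPK | AUDIO_DEVICE_IN_MIC`, for which both `device_has_output()` and `device_has_input()` return true.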

Async IO

The application can supply its own write or read function in place of audio_track_write() or audio_track_read() by registering it through the async_write or async_read interface.

Note that the async APIs cannot utilize the Media Buffer and require additional heap memory to buffer data.

Operating Principle

This section describes the detailed interaction flow between Audio Track and the application.

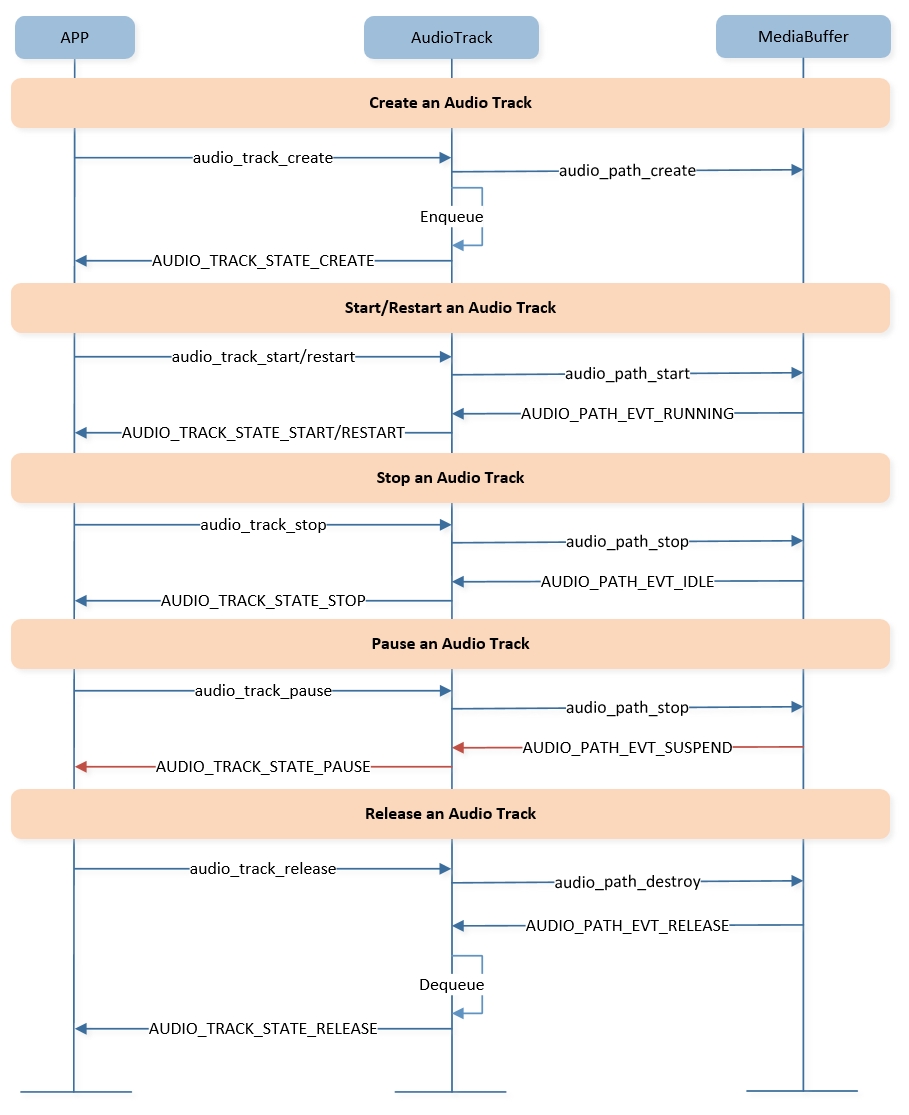

Workflow of Audio Track

The application can call audio_track_create(), audio_track_start(), audio_track_stop(), audio_track_pause(), audio_track_restart(), and audio_track_release() to operate an Audio Track, and it receives the AUDIO_EVENT_TRACK_STATE_CHANGED event when the operation finishes successfully. In addition, the application can query the state of the Audio Track by calling audio_track_state_get().

```c
typedef struct t_audio_event_param_track_state_changed
{
    T_AUDIO_TRACK_HANDLE handle;
    T_AUDIO_TRACK_STATE  state;
    uint8_t              cause;
} T_AUDIO_EVENT_PARAM_TRACK_STATE_CHANGED;

typedef enum t_audio_track_state
{
    AUDIO_TRACK_STATE_RELEASED  = 0x00, /* Audio Track that was released or not created yet. */
    AUDIO_TRACK_STATE_CREATED   = 0x01, /* Audio Track that was created. */
    AUDIO_TRACK_STATE_STARTED   = 0x02, /* Audio Track that was started. */
    AUDIO_TRACK_STATE_STOPPED   = 0x03, /* Audio Track that was stopped. */
    AUDIO_TRACK_STATE_PAUSED    = 0x04, /* Audio Track that was paused. */
    AUDIO_TRACK_STATE_RESTARTED = 0x05, /* Audio Track that was restarted. */
} T_AUDIO_TRACK_STATE;
```

The following figure illustrates the workflow for operating an Audio Track:

- Create an Audio Track: The application begins by calling audio_track_create() to create an Audio Track. Once the Audio Track is successfully created, the application receives the state AUDIO_TRACK_STATE_CREATED, and the Audio Track instance is enqueued.
- Start an Audio Track: The application can then call audio_track_start() to initiate the Audio Track. It will receive AUDIO_TRACK_STATE_STARTED as feedback, indicating that the audio path is running. At this point, the application can start writing or reading the stream data successfully.
- Stop an Audio Track: The application calls audio_track_stop(), and if successful, it will receive AUDIO_TRACK_STATE_STOPPED.
- Pause an Audio Track: The application can use audio_track_pause(), which is useful for draining buffer data in local-play scenarios. Currently, its function is similar to audio_track_stop().
- Release an Audio Track: The Audio Track can be released using the audio_track_release() interface. After the audio path is destroyed, the Audio Track instance will dequeue, and the application will receive AUDIO_TRACK_STATE_RELEASED. It's essential to release invalid Audio Track instances to prevent them from starting unexpectedly due to queue operations.
- Restart an Audio Track: The audio_track_restart() interface is provided to restart the Audio Track. If the Audio Track is stopped, restarting it is akin to using audio_track_start(). However, if it's already started, the restart operation effectively performs the actions of both audio_track_stop() and audio_track_start().
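The steps above suggest that an application tracks the reported state and only streams data once the track is running. The sketch below shows one way to react to the state carried by AUDIO_EVENT_TRACK_STATE_CHANGED. The enum and event structure repeat the definitions shown earlier; the opaque `void *` handle type, the `app_track_ctx_t` bookkeeping struct, and the handler name are assumptions made so that the example compiles on its own. Treating AUDIO_TRACK_STATE_RESTARTED like AUDIO_TRACK_STATE_STARTED is also an assumption.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Assumed stand-in for the SDK handle type. */
typedef void *T_AUDIO_TRACK_HANDLE;

/* Repeated from the definitions in this document. */
typedef enum t_audio_track_state
{
    AUDIO_TRACK_STATE_RELEASED  = 0x00,
    AUDIO_TRACK_STATE_CREATED   = 0x01,
    AUDIO_TRACK_STATE_STARTED   = 0x02,
    AUDIO_TRACK_STATE_STOPPED   = 0x03,
    AUDIO_TRACK_STATE_PAUSED    = 0x04,
    AUDIO_TRACK_STATE_RESTARTED = 0x05,
} T_AUDIO_TRACK_STATE;

typedef struct t_audio_event_param_track_state_changed
{
    T_AUDIO_TRACK_HANDLE handle;
    T_AUDIO_TRACK_STATE  state;
    uint8_t              cause;
} T_AUDIO_EVENT_PARAM_TRACK_STATE_CHANGED;

/* Hypothetical application-side bookkeeping for one track. */
typedef struct
{
    T_AUDIO_TRACK_HANDLE handle;     /* NULL while no track exists */
    bool                 io_allowed; /* true while write/read may succeed */
} app_track_ctx_t;

/* Update the bookkeeping from a state-changed event. */
void app_on_track_state_changed(app_track_ctx_t *ctx,
                                const T_AUDIO_EVENT_PARAM_TRACK_STATE_CHANGED *evt)
{
    switch (evt->state)
    {
    case AUDIO_TRACK_STATE_CREATED:
        ctx->handle = evt->handle;
        ctx->io_allowed = false;
        break;
    case AUDIO_TRACK_STATE_STARTED:
    case AUDIO_TRACK_STATE_RESTARTED: /* assumption: track runs after a restart */
        ctx->io_allowed = true;
        break;
    case AUDIO_TRACK_STATE_STOPPED:
    case AUDIO_TRACK_STATE_PAUSED:
        ctx->io_allowed = false;
        break;
    case AUDIO_TRACK_STATE_RELEASED:
        ctx->handle = NULL;
        ctx->io_allowed = false;
        break;
    default:
        break;
    }
}
```

With this in place, the data path can check `io_allowed` before calling the write or read API instead of relying on the call to fail.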

Audio Track Operating Flow

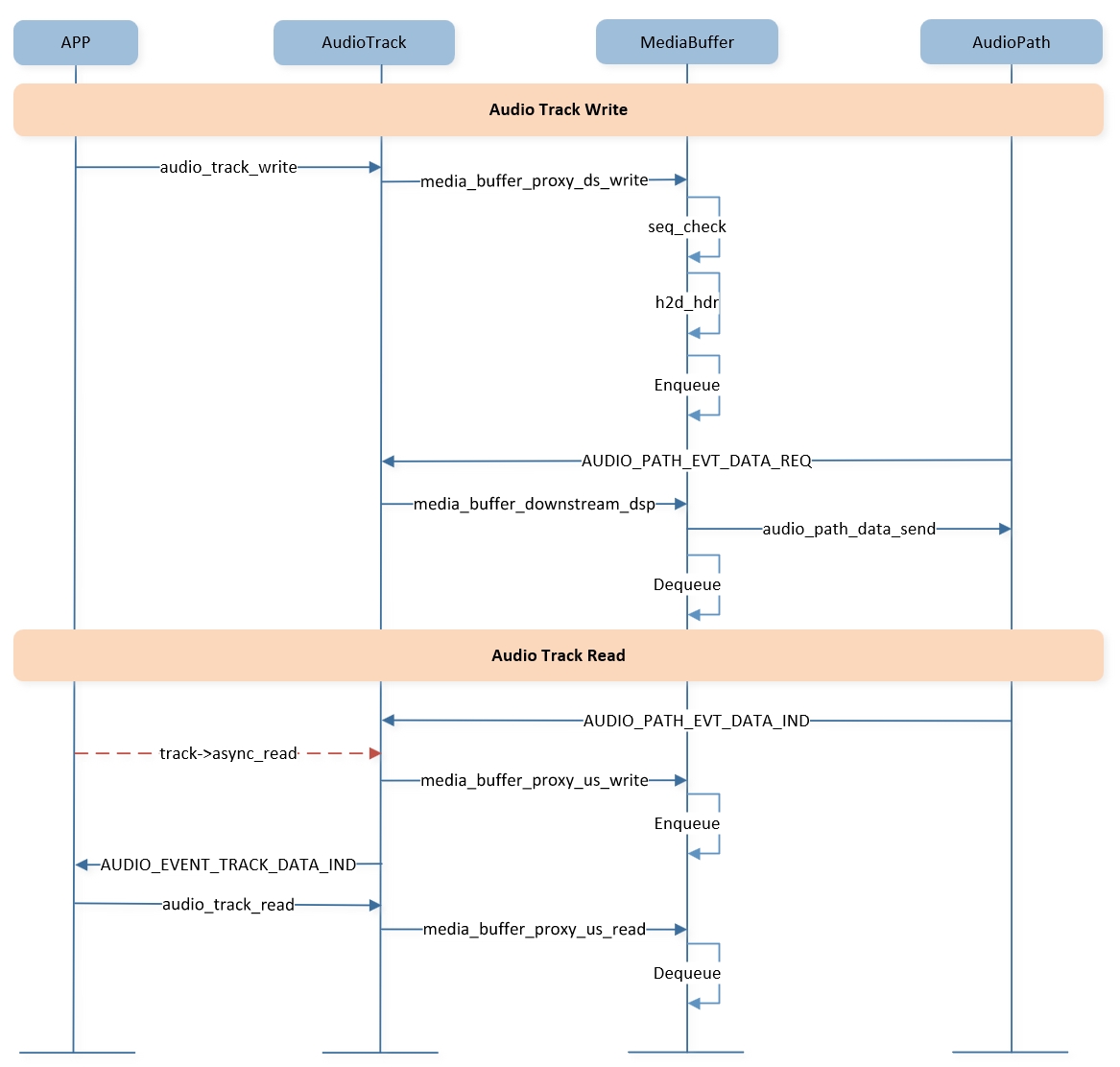

Streamflow of Audio Track

Note

Please remember that the audio stream can be written or read successfully only after the Audio Track is started.

The application can write stream data to the Audio Track with audio_track_write() and read stream data from the Audio Track with audio_track_read(). If the application registered its own write or read function during audio_track_create() (that is, async_write or async_read is not NULL), it must use the registered function instead of audio_track_write() or audio_track_read().

The following figure outlines the streamflow of Audio Track:

- Audio Track Write:
  - When the application calls audio_track_write(), the packet is enqueued into the Media Buffer after verifying the sequence number and attaching an H2D header.
  - Upon receiving AUDIO_PATH_EVT_DATA_REQ, the Media Buffer will downstream the packet following a FIFO approach.
- Audio Track Read:
  - The AUDIO_PATH_EVT_DATA_IND event indicates that there is a packet to be read from the DSP.
  - The Media Buffer will first enqueue this packet and notify the application with the event AUDIO_EVENT_TRACK_DATA_IND.
  - If the async_read of the Audio Track is NULL, the packet will be dequeued after audio_track_read() is completed.
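The write path described above (verify the sequence number, enqueue, then hand packets downstream in FIFO order) can be illustrated with a small ring buffer. This is a conceptual sketch, not the Media Buffer implementation: all names are hypothetical, the H2D header is omitted because its layout is not given here, and the rule that each sequence number must be exactly one greater than the previous one is an assumption about what "verifying" means.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define FIFO_CAPACITY 8

/* Hypothetical packet descriptor; payload storage is left out for brevity. */
typedef struct
{
    uint16_t seq;
    uint16_t len;
} pkt_desc_t;

typedef struct
{
    pkt_desc_t slots[FIFO_CAPACITY];
    size_t     head;     /* index of the oldest packet */
    size_t     count;    /* number of queued packets */
    bool       has_last; /* false until the first packet is accepted */
    uint16_t   last_seq; /* sequence number of the newest accepted packet */
} media_fifo_t;

/* Write side: reject a full queue or an unexpected sequence number. */
bool fifo_write(media_fifo_t *f, uint16_t seq, uint16_t len)
{
    pkt_desc_t *slot;

    if (f->count == FIFO_CAPACITY)
    {
        return false;
    }
    if (f->has_last && seq != (uint16_t)(f->last_seq + 1))
    {
        return false; /* assumed rule: sequence numbers must be contiguous */
    }
    slot = &f->slots[(f->head + f->count) % FIFO_CAPACITY];
    slot->seq = seq;
    slot->len = len;
    f->count++;
    f->last_seq = seq;
    f->has_last = true;
    return true;
}

/* Data-request side: pop the oldest packet, as on AUDIO_PATH_EVT_DATA_REQ. */
bool fifo_data_req(media_fifo_t *f, pkt_desc_t *out)
{
    if (f->count == 0)
    {
        return false;
    }
    *out = f->slots[f->head];
    f->head = (f->head + 1) % FIFO_CAPACITY;
    f->count--;
    return true;
}
```

Starting from a zero-initialized `media_fifo_t`, writing sequence numbers 10 and 11 succeeds, writing 13 is rejected, and two data requests return packets 10 and 11 in that order.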

Audio Track Stream Flow

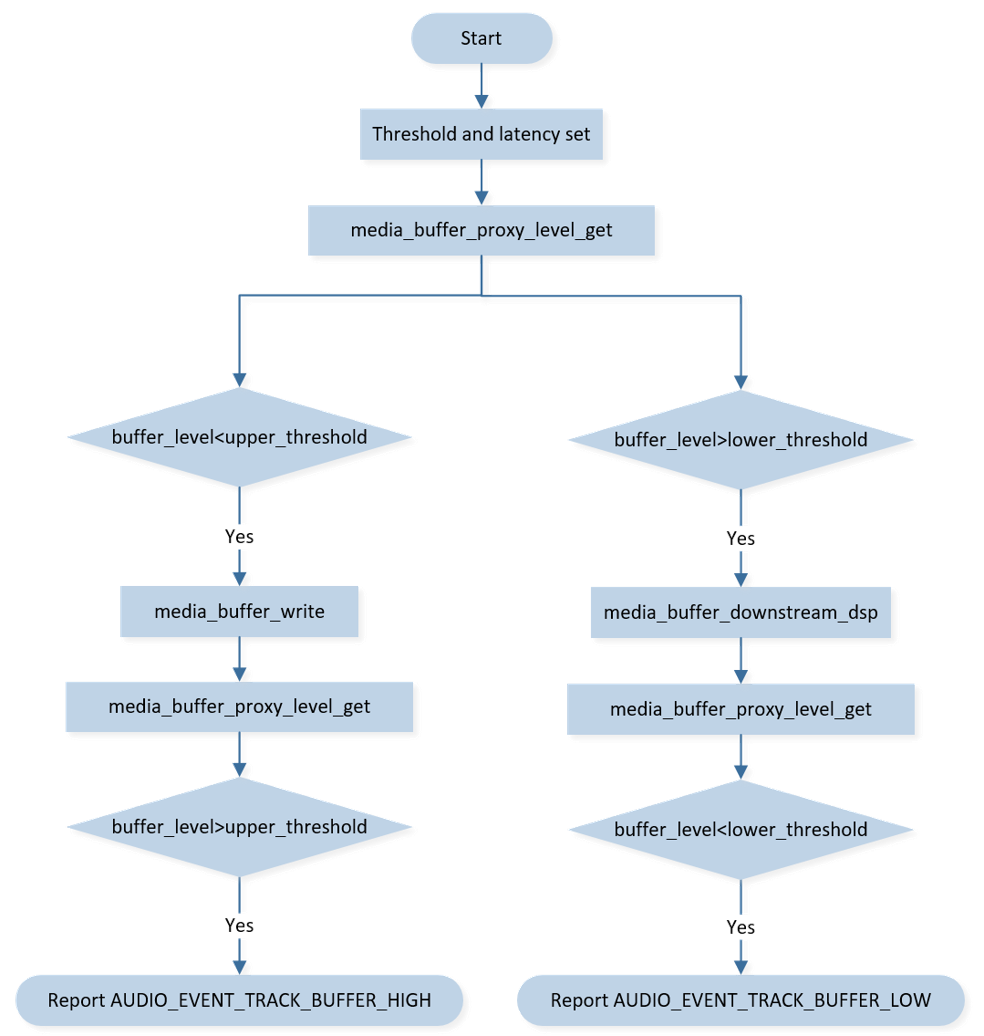

Buffer Controller Implementation

In most scenarios, the SRC transmits audio stream data at the same rate as Playback. However, local Playback lacks such a direct SRC controller, making effective audio buffering crucial for smooth and uninterrupted Playback.

As illustrated in the figure below, by setting upper and lower thresholds, as well as specifying latency for the Media Buffer, you can ensure that the buffer level is continuously monitored. It is essential that the application maintains latency within these upper and lower thresholds. If the buffer level falls below the lower threshold, the application will receive an AUDIO_EVENT_TRACK_BUFFER_LOW event, prompting it to write sufficient audio stream data to normalize the buffer level. Conversely, if the buffer level exceeds the upper threshold, the application will receive an AUDIO_EVENT_TRACK_BUFFER_HIGH event, requiring it to pause writing audio stream data until the buffer level returns to normal.

It's important to note that if the buffer level consistently remains high or low, no additional events will be triggered to notify the application.
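The behavior described above, where an event is raised only when the level crosses a threshold and not while it stays beyond it, is edge-triggered. The sketch below models that behavior; it is not the SDK's implementation, and `buffer_monitor_t`, `buffer_event_t`, and `buffer_monitor_update()` are hypothetical names.

```c
#include <stdint.h>

typedef enum { ZONE_NORMAL, ZONE_LOW, ZONE_HIGH } level_zone_t;
typedef enum { EVT_NONE, EVT_BUFFER_LOW, EVT_BUFFER_HIGH } buffer_event_t;

/* Hypothetical monitor state; thresholds and level are in milliseconds. */
typedef struct
{
    uint16_t     upper_ms;
    uint16_t     lower_ms;
    level_zone_t zone; /* zone observed on the previous update */
} buffer_monitor_t;

/*
 * Classify the new level and report an event only when the level has just
 * entered the low or high zone. Staying in the same zone reports nothing,
 * and returning to the normal zone is silent.
 */
buffer_event_t buffer_monitor_update(buffer_monitor_t *m, uint16_t level_ms)
{
    level_zone_t next = ZONE_NORMAL;
    buffer_event_t evt = EVT_NONE;

    if (level_ms < m->lower_ms)
    {
        next = ZONE_LOW;
    }
    else if (level_ms > m->upper_ms)
    {
        next = ZONE_HIGH;
    }

    if (next != m->zone)
    {
        if (next == ZONE_LOW)
        {
            evt = EVT_BUFFER_LOW;
        }
        else if (next == ZONE_HIGH)
        {
            evt = EVT_BUFFER_HIGH;
        }
    }
    m->zone = next;
    return evt;
}
```

Since no repeat notification arrives while the level stays low or high, an application that misses the single event can still poll the level itself, for example with audio_track_buffer_level_get().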

Audio Track Buffer Control

API Usage

The following items present the application programming interfaces (APIs) offered by the Audio Track component, with brief descriptions and usage precautions:

- The following outlines how to appropriately handle an Audio Track within the A2DP Playback context.
  - In environments supporting Multilink, the UUID is crucial for uniquely identifying each Audio Track with the same stream type. Ensure that a unique UUID is assigned to each track during creation to avoid conflicts.
  - While there is a default latency setting for AUDIO_STREAM_TYPE_PLAYBACK, it is advisable to explicitly set a specific latency for your Audio Track. This ensures that Playback meets the desired performance criteria, tailored to your application's requirements.
  - After successfully creating and configuring the Audio Track, call audio_track_start() to begin audio processing.

```c
#include <string.h> /* memcpy */

static void app_audio_bt_cback(T_BT_EVENT event_type, void *event_buf, uint16_t buf_len)
{
    T_BT_EVENT_PARAM *param = event_buf;
    T_APP_BR_LINK *p_link;

    p_link = app_find_br_link(param->a2dp_stream_data_ind.bd_addr);
    if (p_link == NULL)
    {
        /* No link matches this address, so there is no track to operate on. */
        return;
    }

    switch (event_type)
    {
    case BT_EVENT_A2DP_STREAM_START_IND:
        {
            T_AUDIO_FORMAT_INFO format_info;
            uint8_t audio_gain_level = 10;
            uint8_t uuid[8] = {0};
            uint16_t latency = 280;

            /* Describe the SBC stream so that the DSP knows how to decode it. */
            format_info.type = AUDIO_FORMAT_TYPE_SBC;
            format_info.frame_num = 8;
            format_info.attr.sbc.sample_rate = 44100;
            format_info.attr.sbc.chann_mode = AUDIO_SBC_CHANNEL_MODE_STEREO;
            format_info.attr.sbc.chann_location = AUDIO_CHANNEL_LOCATION_FL | AUDIO_CHANNEL_LOCATION_FR;
            format_info.attr.sbc.block_length = 16;
            format_info.attr.sbc.subband_num = 8;
            format_info.attr.sbc.allocation_method = 1;
            format_info.attr.sbc.bitpool = 53;

            /* Build a unique UUID: the characters 'B' and 'T' followed by the 6-byte peer address. */
            uuid[0] = 'B';
            uuid[1] = 'T';
            memcpy(&uuid[2], &p_link->bd_addr, 6);

            p_link->a2dp_track_handle = audio_track_create(AUDIO_STREAM_TYPE_PLAYBACK,
                                                           AUDIO_STREAM_MODE_NORMAL,
                                                           AUDIO_STREAM_USAGE_SNOOP,
                                                           format_info,
                                                           audio_gain_level,
                                                           0,
                                                           AUDIO_DEVICE_OUT_SPK,
                                                           NULL,
                                                           NULL);
            if (p_link->a2dp_track_handle != NULL)
            {
                audio_track_uuid_set(p_link->a2dp_track_handle, uuid);
                audio_track_latency_set(p_link->a2dp_track_handle, latency, true);
                audio_track_start(p_link->a2dp_track_handle);
            }
        }
        break;

    case BT_EVENT_A2DP_STREAM_STOP:
        {
            audio_track_release(p_link->a2dp_track_handle);
        }
        break;

    case BT_EVENT_A2DP_STREAM_CLOSE:
        {
            audio_track_release(p_link->a2dp_track_handle);
        }
        break;

    default:
        break;
    }
}
```

- After the Audio Track has started, the application can start writing audio stream data to the Media Buffer.

```c
static void app_audio_bt_cback(T_BT_EVENT event_type, void *event_buf, uint16_t buf_len)
{
    T_BT_EVENT_PARAM *param = event_buf;
    bool handle = true;

    switch (event_type)
    {
    case BT_EVENT_A2DP_STREAM_DATA_IND:
        {
            uint16_t written_len;
            T_APP_BR_LINK *p_link;
            T_AUDIO_STREAM_STATUS status = AUDIO_STREAM_STATUS_CORRECT;

            p_link = app_find_br_link(param->a2dp_stream_data_ind.bd_addr);
            if (p_link != NULL)
            {
                /* Forward the received A2DP payload to the Audio Track. */
                audio_track_write(p_link->a2dp_track_handle,
                                  param->a2dp_stream_data_ind.bt_clock,
                                  param->a2dp_stream_data_ind.seq_num,
                                  status,
                                  param->a2dp_stream_data_ind.frame_num,
                                  param->a2dp_stream_data_ind.payload,
                                  param->a2dp_stream_data_ind.len,
                                  &written_len);
            }
        }
        break;
    }
}
```

- The application can get the current volume, adjust the volume of the Audio Track dynamically, and mute or unmute the Audio Track.

```c
bool audio_track_volume_out_get(T_AUDIO_TRACK_HANDLE handle, uint8_t *volume);
bool audio_track_volume_out_set(T_AUDIO_TRACK_HANDLE handle, uint8_t volume);
bool audio_track_volume_out_mute(T_AUDIO_TRACK_HANDLE handle);
bool audio_track_volume_out_unmute(T_AUDIO_TRACK_HANDLE handle);
```

- It's essential to declare the Audio Track policy before creating any tracks. This declaration determines whether the application can support multiple Audio Track instances co-existing simultaneously. This decision affects the management of resources and audio data handling capabilities within the application.

```c
typedef enum t_audio_track_policy
{
    AUDIO_TRACK_POLICY_SINGLE_STREAM = 0x00, /* Audio Track single stream policy */
    AUDIO_TRACK_POLICY_MULTI_STREAM  = 0x01, /* Audio Track multi stream policy */
} T_AUDIO_TRACK_POLICY;

bool audio_track_policy_set(T_AUDIO_TRACK_POLICY policy);
```

- The following APIs are crucial for monitoring the buffer level effectively and are usually used in Playback with AUDIO_STREAM_USAGE_LOCAL.

```c
bool audio_track_threshold_set(T_AUDIO_TRACK_HANDLE handle, uint16_t upper_threshold, uint16_t lower_threshold);
bool audio_track_buffer_level_get(T_AUDIO_TRACK_HANDLE handle, uint16_t *level);
```

Here's an example of how to set the thresholds:

```c
uint16_t upper_threshold = playback_pool_size / packet_size * packet_duration * 0.7;
uint16_t lower_threshold = packet_duration * 1;

audio_track_threshold_set(handle, upper_threshold, lower_threshold);
```

If the codec format is AUDIO_FORMAT_TYPE_PCM and each packet carries 480 samples of 16-bit stereo audio at a 48 kHz sample rate, the packet_size is 480 * 2 * 2 = 1920 bytes and the packet_duration is 10 ms. If the Playback pool size is 15 KB, then the upper_threshold is 15 * 1024 / 1920 * 10 ms * 0.7 = 56 ms, and the lower_threshold is 10 ms * 1 = 10 ms.
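The arithmetic in this worked example can be reproduced with integer-only helpers, which avoids floating point on small targets. The function and type names below are hypothetical; the formula follows the threshold example above, with the 0.7 factor written as 7 / 10.

```c
#include <stdint.h>

/* Hypothetical container for the two thresholds, in milliseconds. */
typedef struct
{
    uint16_t upper_ms;
    uint16_t lower_ms;
} track_thresholds_t;

/* Bytes in one PCM packet: samples per channel * bytes per sample * channels. */
uint32_t pcm_packet_size_bytes(uint32_t samples, uint32_t bits_per_sample, uint32_t channels)
{
    return samples * (bits_per_sample / 8u) * channels;
}

/* Duration of one PCM packet in milliseconds. */
uint32_t pcm_packet_duration_ms(uint32_t samples, uint32_t sample_rate_hz)
{
    return samples * 1000u / sample_rate_hz;
}

/*
 * Upper threshold: 70% of the audio duration that fits in the pool.
 * Lower threshold: the duration of one packet.
 */
track_thresholds_t thresholds_from_pool(uint32_t pool_size_bytes,
                                        uint32_t packet_size_bytes,
                                        uint32_t packet_duration_ms)
{
    track_thresholds_t t;
    uint32_t packets_in_pool = pool_size_bytes / packet_size_bytes;

    t.upper_ms = (uint16_t)(packets_in_pool * packet_duration_ms * 7u / 10u);
    t.lower_ms = (uint16_t)packet_duration_ms;
    return t;
}
```

With 480 samples of 16-bit stereo at 48000 Hz and a pool of 15 * 1024 bytes, the helpers give a packet size of 1920 bytes, a packet duration of 10 ms, an upper threshold of 56 ms, and a lower threshold of 10 ms, matching the figures above.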

See Also

Please refer to the relevant API Reference: